Ookla gets punchy with its UK testing rivals over 5G speed claims

Dr. Brian Connelly, head of the data science team at Ookla, has blasted the poor data, bad methodology, and questionable 5G identification that competitors are using.

In a post that’s guaranteed to raise eyebrows within the world of mobile networking, Ookla’s head of data science, Dr. Brian Connelly, has taken a swipe at the methodology and data that testing companies in the UK are using to measure 5G speeds.

The article, titled ‘5G Claims Gone Wrong: The Dangers of Bad Data’, runs through recent studies (from the likes of nPerf, Opensignal, umlaut and RootMetrics) and cites a number of issues with how these companies reach conclusions about the state of 5G provision in a given area.

“Squandering marketing budgets to promote claims based on unsound data or unsound methodologies helps no one: not the customer, the operator or even the company providing the unsound claim,” Connelly writes. “When Ookla stands behind a claim of ‘fastest’ or ‘best’ network in a country, we do so only when a strict set of conditions has been met. Being a trusted source of consumer information was our first function and it remains the driver behind our mission.”

Poor methodology

In his post, Connelly explains how Opensignal’s methodology allows for a blend of testing methods, including background tests, download size-capped tests and foreground customer-initiated tests with low usage rates. He claims, however, that the company’s public reporting “consistently makes no distinction between results derived from these differing methodologies and presents them as a simple national average”.

“This mix of methodologies introduces the possibility that any reported differences in download speed among operators may be due to differences in testing and not due to differences in the actual services that they provide,” Connelly explains.
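Connelly’s point is easiest to see with a deliberately simplified example. The Python sketch below assumes two hypothetical operators whose networks deliver identical speeds under each test method, using invented figures of 40 Mbps for capped background tests and 100 Mbps for foreground user tests; the only difference is the share of each test type in their results. The method names, shares and speeds are illustrative assumptions, not Opensignal data.

```python
# Hypothetical illustration only: invented speeds, not Opensignal data.
# Assume each test method reports the same speed on both operators' networks.
SPEED_BY_METHOD = {
    "background_capped": 40.0,  # size-capped background tests, Mbps (assumed)
    "foreground_user": 100.0,   # customer-initiated foreground tests, Mbps (assumed)
}


def blended_average(mix: dict[str, float]) -> float:
    """Simple average over all tests, given each method's share of the total."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("method shares must sum to 1")
    return sum(share * SPEED_BY_METHOD[method] for method, share in mix.items())


# The networks are identical; only the mix of test methods differs.
operator_a = {"background_capped": 0.8, "foreground_user": 0.2}
operator_b = {"background_capped": 0.2, "foreground_user": 0.8}

print(f"Operator A: {blended_average(operator_a):.1f} Mbps")
print(f"Operator B: {blended_average(operator_b):.1f} Mbps")
```

With these assumed numbers, the simple average puts Operator B well ahead (88 Mbps versus 52 Mbps) even though the two networks perform identically, which is the kind of testing artefact Connelly describes.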

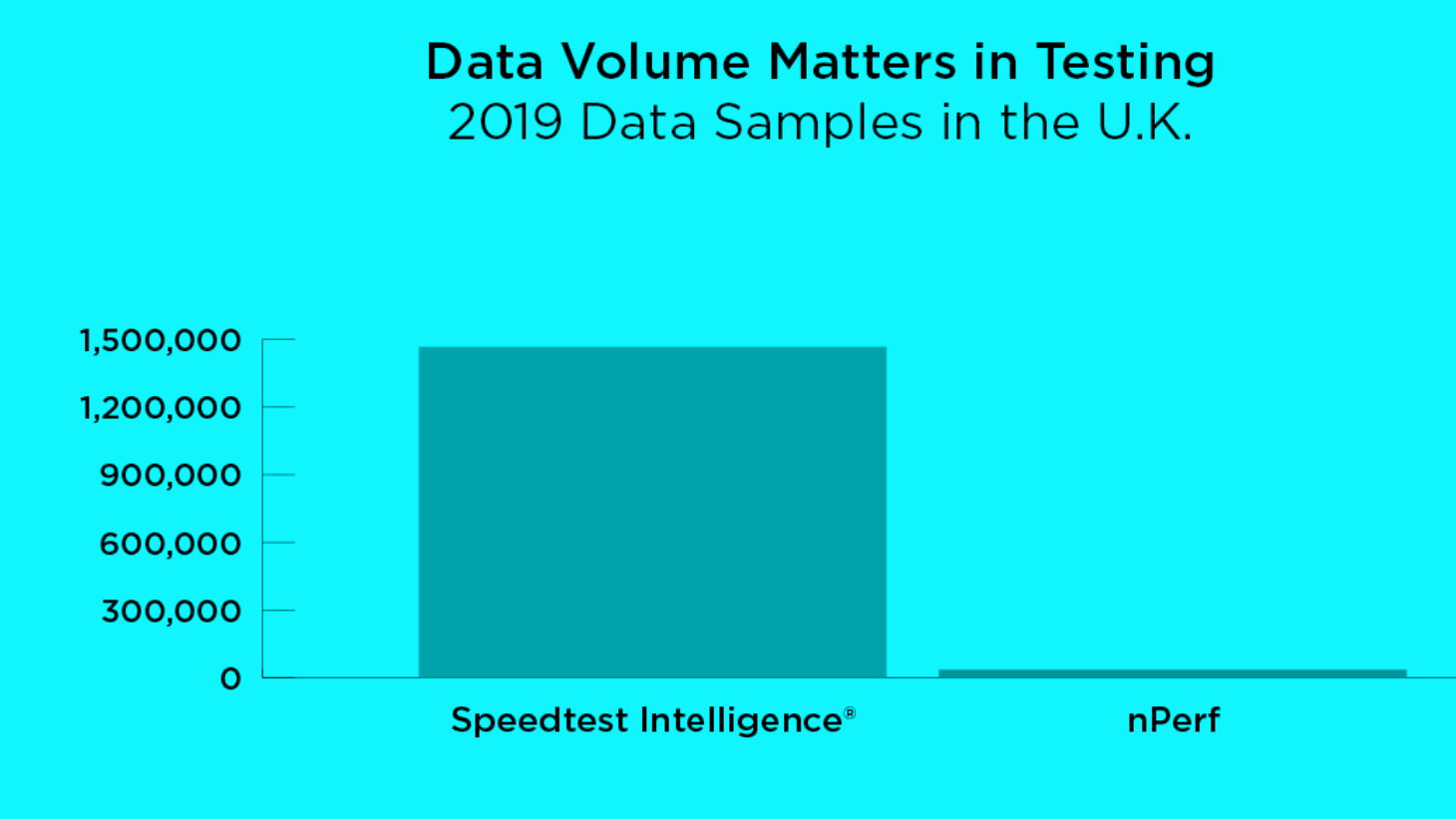

Connelly also takes aim at companies that use a limited amount of data to deliver headline-grabbing statements about 5G. He points out that a recent nPerf report was based on 36,000 samples taken over a year, and says that simply isn’t enough.

“For comparison, Ookla collects over 15,000 tests every day in the UK,” Connelly says. “It took Ookla less than three days to collect the same amount of data that nPerf collected in a year. Generally speaking, lower sample counts tend to produce results with much less statistical certainty.”
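The arithmetic behind Connelly’s comparison, and the usual rule of thumb linking sample size to certainty, can be checked with a short Python sketch. It uses only the two figures quoted above and treats “over 15,000” as a minimum. The standard-error comparison assumes idealised, independent random samples, which real crowdsourced speed tests do not fully satisfy, so it indicates the direction of the effect rather than a precise measure of either company’s uncertainty.

```python
import math

# Figures quoted in the article.
NPERF_SAMPLES_PER_YEAR = 36_000
OOKLA_TESTS_PER_DAY = 15_000  # "over 15,000", so treated here as a lower bound

# Days Ookla needs to match nPerf's annual sample count.
days_to_match = NPERF_SAMPLES_PER_YEAR / OOKLA_TESTS_PER_DAY


def standard_error_ratio(n_small: int, n_large: int) -> float:
    """How many times larger the standard error of a mean is with n_small
    samples than with n_large, assuming independent samples from one
    population (standard error proportional to 1 / sqrt(n))."""
    return math.sqrt(n_large / n_small)


ookla_per_year = OOKLA_TESTS_PER_DAY * 365  # at the quoted minimum rate
ratio = standard_error_ratio(NPERF_SAMPLES_PER_YEAR, ookla_per_year)

print(f"Days to match nPerf's year: {days_to_match:.1f}")
print(f"Standard error ratio (idealised): {ratio:.1f}x")
```

At 15,000 tests a day, 36,000 samples take 2.4 days, consistent with Connelly’s “less than three days”. Under the idealised assumption, a full year of data at that rate would carry a standard error roughly 12 times smaller than a 36,000-sample year.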

According to Connelly, the nPerf report produced results “wildly different” from those of other testing companies, which he said was unsurprising “given their limited sample size”.

In response to the Ookla post, however, nPerf's head of media relations, Raul Sanchez, contacted 5Gradar to explain that nPerf does not produce the same volume of tests, and that there is good reason for this. Sanchez gave three reasons why nPerf performs fewer tests than Ookla:

- "There is no point in having millions of tests per month to have a representative panel."

- "nPerf operates a drastic filtering, aiming to eliminate all the biases of an over-representation of one or more devices."

- "nPerf measures additional QoE KPIs that cause the podium to be different from that established by Ookla."
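nPerf’s filtering is not detailed in its response, but one generic way to stop a few heavy users from dominating a result is to aggregate per device before averaging across devices. The Python sketch below illustrates that idea on invented results; the device IDs, speeds and function names are hypothetical and are not nPerf’s actual method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw results as (device_id, download_mbps); invented values.
results = [
    ("phone-1", 200.0), ("phone-1", 210.0), ("phone-1", 190.0), ("phone-1", 200.0),
    ("phone-2", 50.0),
    ("phone-3", 60.0),
]


def per_test_mean(rows):
    """Naive average: every test counts equally, so heavy testers dominate."""
    return mean(speed for _, speed in rows)


def per_device_mean(rows):
    """Average each device's tests first, then average across devices,
    so every device contributes exactly once."""
    by_device = defaultdict(list)
    for device, speed in rows:
        by_device[device].append(speed)
    return mean(mean(speeds) for speeds in by_device.values())


print(f"Per-test mean:   {per_test_mean(results):.1f} Mbps")
print(f"Per-device mean: {per_device_mean(results):.1f} Mbps")
```

Here a single device that runs four tests pulls the naive per-test average up to about 152 Mbps, while counting each device once gives about 103 Mbps.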

Sanchez was also at pains to point out that nPerf does not rely solely on third-party CDNs, which Connelly had flagged up in his criticism of testing methodology.

"The first point [in the post] is totally false concerning nPerf and servers; we have a policy similar to Ookla with the advantage that we manage almost half of the servers that we offer ourselves (more than 1,100 servers worldwide)," Sanchez told us. "And we also offer total transparency on the max bandwidth of the servers, which Ookla doesn't do.

"Finally, we cannot compare the results of Ookla with ours, since we do not measure the same thing. Our speed and latency results are weighted by streaming and browsing tests, something Ookla does not do."

3 is the magic number

Elsewhere in his post, Connelly discusses drive testing, in which pre-configured test equipment is driven along pre-configured routes. The approach produces lab-like conditions, has long been a staple of network reporting, and is used by companies such as umlaut and RootMetrics.

“Narrow measurements taken from a small number of devices, in cars, in a test environment, on a small number of routes over a small time period do not provide a sufficient sample to underpin the claim of ‘fastest 5G’ in one of the world’s foremost cities,” Connelly explains.

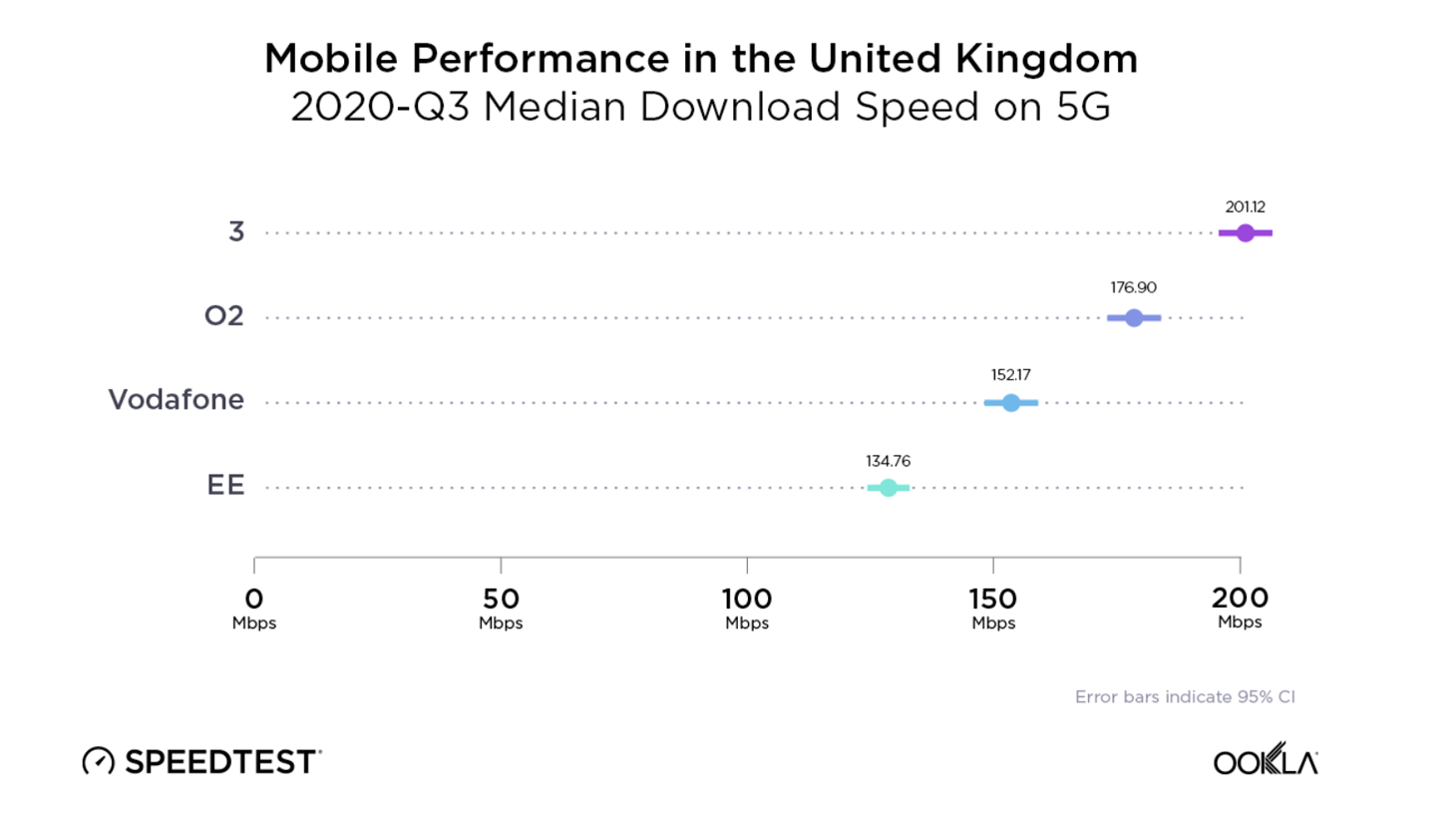

Having criticised the studies currently measuring 5G performance in the UK, Connelly turns to Ookla’s own testing, which has highlighted some surprising results.

“As we found in our recent U.K. market analysis, the 3 network has by far the fastest median download speed over 5G in the UK,” Connelly says.

“This analysis is based on over 60,000 Speedtest results taken over 5G by more than 16,000 devices in the U.K. during Q3 2020. In all, there were over 500,000 samples of Speedtest results in the U.K. during Q3 2020. EE shows the highest Time Spent on 5G by 5G-capable devices at 10.9%, with 3 coming in at a distant second and Vodafone third,” Connelly concludes.
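Ookla’s ranking rests on median download speed rather than a mean. The Python sketch below, using invented numbers for two hypothetical operators rather than Ookla data, shows why that choice matters: a single extreme result can lift one operator’s mean above another’s while leaving the median, and so a median-based ranking, unaffected. Ookla’s actual aggregation pipeline is not described in the article.

```python
from statistics import mean, median

# Hypothetical 5G download results in Mbps (invented, not Ookla data).
samples = {
    "Operator X": [80.0, 95.0, 110.0, 120.0, 900.0],  # one extreme outlier
    "Operator Y": [150.0, 160.0, 170.0, 180.0, 190.0],
}

for operator, speeds in samples.items():
    print(f"{operator}: mean {mean(speeds):.0f} Mbps, median {median(speeds):.0f} Mbps")

# Rank operators by median download speed, fastest first.
ranking = sorted(samples, key=lambda op: median(samples[op]), reverse=True)
print("Ranked by median:", ranking)
```

With these assumed values, Operator X has the higher mean (261 Mbps versus 170 Mbps) because of one 900 Mbps result, but Operator Y has the higher median (170 Mbps versus 110 Mbps).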

Update: This post has been updated with a response from nPerf, one of the companies mentioned in Connelly's blog post.

Dan is a British journalist with 20 years of experience in the design and tech sectors, producing content for the likes of Microsoft, Adobe, Dell and The Sunday Times. In 2012 he helped launch the world's number one design blog, Creative Bloq. Dan is now editor-in-chief at 5Gradar, where he oversees news, insight and reviews, providing an invaluable resource for anyone looking to stay up-to-date with the key issues facing 5G.